mirror of

https://github.com/Raghu-Ch/nodeRestAPI.git

synced 2026-02-10 12:43:02 -05:00

initial commit

15

node_modules/readdirp/.npmignore

generated

vendored

Normal file

@@ -0,0 +1,15 @@

|

||||

lib-cov

|

||||

*.seed

|

||||

*.log

|

||||

*.csv

|

||||

*.dat

|

||||

*.out

|

||||

*.pid

|

||||

*.gz

|

||||

|

||||

pids

|

||||

logs

|

||||

results

|

||||

|

||||

node_modules

|

||||

npm-debug.log

|

||||

6

node_modules/readdirp/.travis.yml

generated

vendored

Normal file

@@ -0,0 +1,6 @@

|

||||

language: node_js

|

||||

node_js:

|

||||

- "0.10"

|

||||

- "0.12"

|

||||

- "4.4"

|

||||

- "6.2"

|

||||

20

node_modules/readdirp/LICENSE

generated

vendored

Normal file

@@ -0,0 +1,20 @@

|

||||

This software is released under the MIT license:

|

||||

|

||||

Copyright (c) 2012-2015 Thorsten Lorenz

|

||||

|

||||

Permission is hereby granted, free of charge, to any person obtaining a copy of

|

||||

this software and associated documentation files (the "Software"), to deal in

|

||||

the Software without restriction, including without limitation the rights to

|

||||

use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of

|

||||

the Software, and to permit persons to whom the Software is furnished to do so,

|

||||

subject to the following conditions:

|

||||

|

||||

The above copyright notice and this permission notice shall be included in all

|

||||

copies or substantial portions of the Software.

|

||||

|

||||

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

||||

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS

|

||||

FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR

|

||||

COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER

|

||||

IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN

|

||||

CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

|

||||

233

node_modules/readdirp/README.md

generated

vendored

Normal file

@@ -0,0 +1,233 @@

|

||||

# readdirp

|

||||

|

||||

|

||||

|

||||

Recursive version of [fs.readdir](http://nodejs.org/docs/latest/api/fs.html#fs_fs_readdir_path_callback). Exposes a **stream api**.

|

||||

|

||||

```javascript
var readdirp = require('readdirp')
  , path = require('path')
  , es = require('event-stream');

// print out all JavaScript files along with their size

var stream = readdirp({ root: path.join(__dirname), fileFilter: '*.js' });
stream
  .on('warn', function (err) {
    console.error('non-fatal error', err);
    // optionally call stream.destroy() here in order to abort and cause 'close' to be emitted
  })
  .on('error', function (err) { console.error('fatal error', err); })
  .pipe(es.mapSync(function (entry) {
    return { path: entry.path, size: entry.stat.size };
  }))
  .pipe(es.stringify())
  .pipe(process.stdout);
```

|

||||

|

||||

Meant to be one of the recursive versions of [fs](http://nodejs.org/docs/latest/api/fs.html) functions, e.g., like [mkdirp](https://github.com/substack/node-mkdirp).

|

||||

|

||||

**Table of Contents** *generated with [DocToc](http://doctoc.herokuapp.com/)*

|

||||

|

||||

- [Installation](#installation)
- [API](#api)
    - [entry stream](#entry-stream)
    - [options](#options)
    - [entry info](#entry-info)
    - [Filters](#filters)
    - [Callback API](#callback-api)
        - [allProcessed](#allprocessed)
        - [fileProcessed](#fileprocessed)
- [More Examples](#more-examples)
    - [stream api](#stream-api)
    - [stream api pipe](#stream-api-pipe)
    - [grep](#grep)
    - [using callback api](#using-callback-api)
    - [tests](#tests)

|

||||

|

||||

|

||||

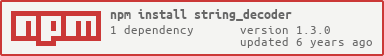

# Installation

|

||||

|

||||

npm install readdirp

|

||||

|

||||

# API

|

||||

|

||||

***var entryStream = readdirp (options)***

|

||||

|

||||

Reads given root recursively and returns a `stream` of [entry info](#entry-info)s.

|

||||

|

||||

## entry stream

|

||||

|

||||

Behaves as follows:

|

||||

|

||||

- `emit('data')` passes an [entry info](#entry-info) whenever one is found

|

||||

- `emit('warn')` passes a non-fatal `Error` that prevents a file/directory from being processed (e.g., if it is inaccessible to the user)

|

||||

- `emit('error')` passes a fatal `Error` which also ends the stream (e.g., when illegal options were passed)

|

||||

- `emit('end')` called when all entries were found and no more will be emitted (i.e., we are done)

|

||||

- `emit('close')` called when the stream is destroyed via `stream.destroy()` (which could be useful if you want to manually abort even on a non-fatal error); at that point the stream is no longer `readable` and no more entries, warnings or errors are emitted

|

||||

- to learn more about streams, consult the very detailed [nodejs streams documentation](http://nodejs.org/api/stream.html) or the [stream-handbook](https://github.com/substack/stream-handbook)

|

||||

|

||||

|

||||

## options

|

||||

|

||||

- **root**: path in which to start reading and recursing into subdirectories

|

||||

|

||||

- **fileFilter**: filter to include/exclude files found (see [Filters](#filters) for more)

|

||||

|

||||

- **directoryFilter**: filter to include/exclude directories found and to recurse into (see [Filters](#filters) for more)

|

||||

|

||||

- **depth**: depth at which to stop recursing even if more subdirectories are found

|

||||

|

||||

- **entryType**: determines if data events on the stream should be emitted for `'files'`, `'directories'`, `'both'`, or `'all'`. Setting to `'all'` will also include entries for other types of file descriptors like character devices, unix sockets and named pipes. Defaults to `'files'`.

|

||||

|

||||

- **lstat**: if `true`, readdirp uses `fs.lstat` instead of `fs.stat` in order to stat files and includes symlink entries in the stream along with files.
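
The effect of **depth** can be illustrated with a small synchronous walk that uses only Node's built-in `fs` and `path` modules. This is a sketch and not readdirp's implementation: `listFiles` is a hypothetical helper, and it assumes that `depth` counts subdirectory levels below `root` (so `0` means only files directly in `root`), which is how the `depth: 1` example in this README reads.

```javascript
const fs = require('fs');
const path = require('path');

// Hypothetical helper: lists files below `root`, descending at most
// `maxDepth` directory levels (0 means only files directly in `root`).
function listFiles(root, maxDepth) {
  const found = [];
  (function walk(dir, depth) {
    // sort so the result order does not depend on the file system
    fs.readdirSync(dir).sort().forEach(function (name) {
      const full = path.join(dir, name);
      const stat = fs.statSync(full);
      if (stat.isDirectory()) {
        // stop recursing once the depth limit is reached
        if (depth < maxDepth) walk(full, depth + 1);
      } else if (stat.isFile()) {
        found.push(path.relative(root, full));
      }
    });
  })(root, 0);
  return found;
}
```

Calling `listFiles('.', 1)` would return files in the current directory and in its immediate subdirectories, but nothing nested deeper.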

|

||||

|

||||

## entry info

|

||||

|

||||

Has the following properties:

|

||||

|

||||

- **parentDir** : directory in which entry was found (relative to given root)

|

||||

- **fullParentDir** : full path to parent directory

|

||||

- **name** : name of the file/directory

|

||||

- **path** : path to the file/directory (relative to given root)

|

||||

- **fullPath** : full path to the file/directory found

|

||||

- **stat** : built in [stat object](http://nodejs.org/docs/v0.4.9/api/fs.html#fs.Stats)

|

||||

- **Example**: (assuming root was `/User/dev/readdirp`)

|

||||

|

||||

parentDir : 'test/bed/root_dir1',

|

||||

fullParentDir : '/User/dev/readdirp/test/bed/root_dir1',

|

||||

name : 'root_dir1_subdir1',

|

||||

path : 'test/bed/root_dir1/root_dir1_subdir1',

|

||||

fullPath : '/User/dev/readdirp/test/bed/root_dir1/root_dir1_subdir1',

|

||||

stat : [ ... ]
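
How these properties relate to each other can be sketched with Node's `path` module. The helper below is hypothetical and not part of readdirp: `buildEntryInfo` is a made-up name, `path.posix` is used so the result is the same on every platform, `stat` is omitted because it needs a real file, and entries located directly in the root may be reported differently by readdirp itself.

```javascript
const path = require('path').posix;

// Hypothetical helper: derives the path-related entry info fields
// from a root directory and a path relative to that root.
function buildEntryInfo(root, relativePath) {
  const fullPath = path.join(root, relativePath);
  return {
    parentDir: path.dirname(relativePath),
    fullParentDir: path.dirname(fullPath),
    name: path.basename(relativePath),
    path: relativePath,
    fullPath: fullPath
  };
}

// Reproduces the path fields of the example above.
const info = buildEntryInfo('/User/dev/readdirp', 'test/bed/root_dir1/root_dir1_subdir1');
console.log(info);
```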

|

||||

|

||||

## Filters

|

||||

|

||||

There are three different ways to specify filters for files and directories respectively.

|

||||

|

||||

- **function**: a function that takes an entry info as a parameter and returns true to include or false to exclude the entry

|

||||

|

||||

- **glob string**: a string (e.g., `*.js`) which is matched using [minimatch](https://github.com/isaacs/minimatch), so go there for more information.

|

||||

|

||||

Globstars (`**`) are not supported since specifying a recursive pattern for an already recursive function doesn't make sense.

|

||||

|

||||

Negated globs (as explained in the minimatch documentation) are allowed, e.g., `!*.txt` matches everything but text files.

|

||||

|

||||

- **array of glob strings**: either need to be all inclusive or all exclusive (negated) patterns otherwise an error is thrown.

|

||||

|

||||

`[ '*.json', '*.js' ]` includes all JavaScript and JSON files.

|

||||

|

||||

|

||||

`[ '!.git', '!node_modules' ]` includes all directories except the '.git' and 'node_modules'.

|

||||

|

||||

Directories that do not pass a filter will not be recursed into.
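
The three filter forms can be thought of as different ways of writing one predicate over an entry info. The sketch below shows one way to normalize them. It is an illustration under stated assumptions, not readdirp's code: `toPredicate` and `globToRegExp` are hypothetical names, the glob matcher is a deliberately tiny stand-in for minimatch that understands only `*` and a leading `!`, and it assumes globs are tested against the entry's `name`.

```javascript
// Simplified stand-in for minimatch: supports only `*` wildcards.
function globToRegExp(glob) {
  const escaped = glob
    .replace(/[.+?^${}()|[\]\\]/g, '\\$&') // escape regex metacharacters
    .replace(/\*/g, '.*');                 // `*` matches any run of characters
  return new RegExp('^' + escaped + '$');
}

// Hypothetical helper: turns a function, a glob string, or an array of
// glob strings into a predicate that returns true to include an entry.
function toPredicate(filter) {
  if (typeof filter === 'function') return filter;

  const globs = Array.isArray(filter) ? filter : [filter];
  const negated = globs.filter(function (g) { return g.charAt(0) === '!'; });

  // patterns must be all inclusive or all exclusive
  if (negated.length !== 0 && negated.length !== globs.length) {
    throw new Error('Cannot mix negated and non-negated glob filters');
  }

  if (negated.length) {
    // all exclusive: include the entry only if no pattern matches
    const excluded = negated.map(function (g) { return globToRegExp(g.slice(1)); });
    return function (entry) {
      return !excluded.some(function (re) { return re.test(entry.name); });
    };
  }

  // all inclusive: include the entry if any pattern matches
  const included = globs.map(globToRegExp);
  return function (entry) {
    return included.some(function (re) { return re.test(entry.name); });
  };
}
```

With this sketch, `toPredicate([ '!.git', '!node_modules' ])` accepts an entry named `src` and rejects one named `.git`, while `toPredicate([ '*.js', '!.git' ])` throws because it mixes both kinds of pattern.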

|

||||

|

||||

## Callback API

|

||||

|

||||

Although the stream api is recommended, readdirp also exposes a callback based api.

|

||||

|

||||

***readdirp (options, callback1 [, callback2])***

|

||||

|

||||

If callback2 is given, callback1 functions as the **fileProcessed** callback, and callback2 as the **allProcessed** callback.

|

||||

|

||||

If only callback1 is given, it functions as the **allProcessed** callback.

|

||||

|

||||

### allProcessed

|

||||

|

||||

- function with err and res parameters, e.g., `function (err, res) { ... }`

|

||||

- **err**: array of errors that occurred during the operation, **res may still be present, even if errors occurred**

|

||||

- **res**: collection of file/directory [entry infos](#entry-info)

|

||||

|

||||

### fileProcessed

|

||||

|

||||

- function with [entry info](#entry-info) parameter e.g., `function (entryInfo) { ... }`
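
The rule for assigning roles to the two callbacks can be written down in a few lines. This is only a sketch of the rule as described above; `resolveCallbacks` is a hypothetical name and not a function exported by readdirp.

```javascript
// Hypothetical helper: decides which role each callback plays.
function resolveCallbacks(callback1, callback2) {
  if (typeof callback2 === 'function') {
    // two callbacks: the first is called per entry, the second once at the end
    return { fileProcessed: callback1, allProcessed: callback2 };
  }
  // a single callback is only called once, when everything is processed
  return { fileProcessed: undefined, allProcessed: callback1 };
}

function onEntry(entryInfo) { /* called for each entry */ }
function onDone(err, res) { /* called once with errors and results */ }

const both = resolveCallbacks(onEntry, onDone);
const single = resolveCallbacks(onDone);
```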

|

||||

|

||||

|

||||

# More Examples

|

||||

|

||||

`on('error', ..)`, `on('warn', ..)` and `on('end', ..)` handling omitted for brevity

|

||||

|

||||

```javascript
var readdirp = require('readdirp');

// Glob file filter
readdirp({ root: './test/bed', fileFilter: '*.js' })
  .on('data', function (entry) {
    // do something with each JavaScript file entry
  });

// Combined glob file filters
readdirp({ root: './test/bed', fileFilter: [ '*.js', '*.json' ] })
  .on('data', function (entry) {
    // do something with each JavaScript and JSON file entry
  });

// Combined negated directory filters
readdirp({ root: './test/bed', directoryFilter: [ '!.git', '!*modules' ] })
  .on('data', function (entry) {
    // do something with each file entry found outside '.git' or any modules directory
  });

// Function directory filter
readdirp({ root: './test/bed', directoryFilter: function (di) { return di.name.length === 9; } })
  .on('data', function (entry) {
    // do something with each file entry found inside directories whose name has length 9
  });

// Limiting depth
readdirp({ root: './test/bed', depth: 1 })
  .on('data', function (entry) {
    // do something with each file entry found up to 1 subdirectory deep
  });

// callback api
readdirp(
    { root: '.' }
  , function (fileInfo) {
      // do something with file entry here
    }
  , function (err, res) {
      // all done, move on or do final step for all file entries here
    }
);
```

|

||||

|

||||

Try more examples by following [instructions](https://github.com/thlorenz/readdirp/blob/master/examples/Readme.md)

|

||||

on how to get going.

|

||||

|

||||

## stream api

|

||||

|

||||

[stream-api.js](https://github.com/thlorenz/readdirp/blob/master/examples/stream-api.js)

|

||||

|

||||

Demonstrates error and data handling by listening to events emitted from the readdirp stream.

|

||||

|

||||

## stream api pipe

|

||||

|

||||

[stream-api-pipe.js](https://github.com/thlorenz/readdirp/blob/master/examples/stream-api-pipe.js)

|

||||

|

||||

Demonstrates error handling by listening to events emitted from the readdirp stream and how to pipe the data stream into

|

||||

another destination stream.

|

||||

|

||||

## grep

|

||||

|

||||

[grep.js](https://github.com/thlorenz/readdirp/blob/master/examples/grep.js)

|

||||

|

||||

Very naive implementation of grep, for demonstration purposes only.

|

||||

|

||||

## using callback api

|

||||

|

||||

[callback-api.js](https://github.com/thlorenz/readdirp/blob/master/examples/callback-api.js)

|

||||

|

||||

Shows how to pass callbacks in order to handle errors and/or data.

|

||||

|

||||

## tests

|

||||

|

||||

The [readdirp tests](https://github.com/thlorenz/readdirp/blob/master/test/readdirp.js) also will give you a good idea on

|

||||

how things work.

|

||||

|

||||

37

node_modules/readdirp/examples/Readme.md

generated

vendored

Normal file

@@ -0,0 +1,37 @@

|

||||

# readdirp examples

|

||||

|

||||

## How to run the examples

|

||||

|

||||

Assuming you installed readdirp (`npm install readdirp`), you can do the following:

|

||||

|

||||

1. `npm explore readdirp`

|

||||

2. `cd examples`

|

||||

3. `npm install`

|

||||

|

||||

At that point you can run the examples with node, i.e., `node grep`.

|

||||

|

||||

## stream api

|

||||

|

||||

[stream-api.js](https://github.com/thlorenz/readdirp/blob/master/examples/stream-api.js)

|

||||

|

||||

Demonstrates error and data handling by listening to events emitted from the readdirp stream.

|

||||

|

||||

## stream api pipe

|

||||

|

||||

[stream-api-pipe.js](https://github.com/thlorenz/readdirp/blob/master/examples/stream-api-pipe.js)

|

||||

|

||||

Demonstrates error handling by listening to events emitted from the readdirp stream and how to pipe the data stream into

|

||||

another destination stream.

|

||||

|

||||

## grep

|

||||

|

||||

[grep.js](https://github.com/thlorenz/readdirp/blob/master/examples/grep.js)

|

||||

|

||||

Very naive implementation of grep, for demonstration purposes only.

|

||||

|

||||

## using callback api

|

||||

|

||||

[callback-api.js](https://github.com/thlorenz/readdirp/blob/master/examples/callback-api.js)

|

||||

|

||||

Shows how to pass callbacks in order to handle errors and/or data.

|

||||

|

||||

10

node_modules/readdirp/examples/callback-api.js

generated

vendored

Normal file

@@ -0,0 +1,10 @@

|

||||

var readdirp = require('..');

|

||||

|

||||

readdirp({ root: '.', fileFilter: '*.js' }, function (errors, res) {

|

||||

if (errors) {

|

||||

errors.forEach(function (err) {

|

||||

console.error('Error: ', err);

|

||||

});

|

||||

}

|

||||

console.log('all javascript files', res);

|

||||

});

|

||||

71

node_modules/readdirp/examples/grep.js

generated

vendored

Normal file

@@ -0,0 +1,71 @@

|

||||

'use strict';

|

||||

var readdirp = require('..')

|

||||

, util = require('util')

|

||||

, fs = require('fs')

|

||||

, path = require('path')

|

||||

, es = require('event-stream')

|

||||

;

|

||||

|

||||

function findLinesMatching (searchTerm) {

|

||||

|

||||

return es.through(function (entry) {

|

||||

var lineno = 0

|

||||

, matchingLines = []

|

||||

, fileStream = this;

|

||||

|

||||

function filter () {

|

||||

return es.mapSync(function (line) {

|

||||

lineno++;

|

||||

return ~line.indexOf(searchTerm) ? lineno + ': ' + line : undefined;

|

||||

});

|

||||

}

|

||||

|

||||

function aggregate () {

|

||||

return es.through(

|

||||

function write (data) {

|

||||

matchingLines.push(data);

|

||||

}

|

||||

, function end () {

|

||||

|

||||

// drop files that had no matches

|

||||

if (matchingLines.length) {

|

||||

var result = { file: entry, lines: matchingLines };

|

||||

|

||||

// pass result on to file stream

|

||||

fileStream.emit('data', result);

|

||||

}

|

||||

this.emit('end');

|

||||

}

|

||||

);

|

||||

}

|

||||

|

||||

fs.createReadStream(entry.fullPath, { encoding: 'utf-8' })

|

||||

|

||||

// handle file contents line by line

|

||||

.pipe(es.split('\n'))

|

||||

|

||||

// keep only the lines that matched the term

|

||||

.pipe(filter())

|

||||

|

||||

// aggregate all matching lines and delegate control back to the file stream

|

||||

.pipe(aggregate())

|

||||

;

|

||||

});

|

||||

}

|

||||

|

||||

console.log('grepping for "arguments"');

|

||||

|

||||

// create a stream of all javascript files found in this and all sub directories

|

||||

readdirp({ root: path.join(__dirname), fileFilter: '*.js' })

|

||||

|

||||

// find all lines matching the term for each file (if none found, that file is ignored)

|

||||

.pipe(findLinesMatching('arguments'))

|

||||

|

||||

// format the results and output

|

||||

.pipe(

|

||||

es.mapSync(function (res) {

|

||||

return '\n\n' + res.file.path + '\n\t' + res.lines.join('\n\t');

|

||||

})

|

||||

)

|

||||

.pipe(process.stdout)

|

||||

;

|

||||

9

node_modules/readdirp/examples/package.json

generated

vendored

Normal file

@@ -0,0 +1,9 @@

|

||||

{

|

||||

"name": "readdirp-examples",

|

||||

"version": "0.0.0",

|

||||

"description": "Examples for readdirp.",

|

||||

"dependencies": {

|

||||

"tap-stream": "~0.1.0",

|

||||

"event-stream": "~3.0.7"

|

||||

}

|

||||

}

|

||||

19

node_modules/readdirp/examples/stream-api-pipe.js

generated

vendored

Normal file

@@ -0,0 +1,19 @@

|

||||

var readdirp = require('..')

|

||||

, path = require('path')

|

||||

, through = require('through2')

|

||||

|

||||

// print out all JavaScript files along with their size

|

||||

readdirp({ root: path.join(__dirname), fileFilter: '*.js' })

|

||||

.on('warn', function (err) { console.error('non-fatal error', err); })

|

||||

.on('error', function (err) { console.error('fatal error', err); })

|

||||

.pipe(through.obj(function (entry, _, cb) {

|

||||

this.push({ path: entry.path, size: entry.stat.size });

|

||||

cb();

|

||||

}))

|

||||

.pipe(through.obj(

|

||||

function (res, _, cb) {

|

||||

this.push(JSON.stringify(res) + '\n');

|

||||

cb();

|

||||

})

|

||||

)

|

||||

.pipe(process.stdout);

|

||||

15

node_modules/readdirp/examples/stream-api.js

generated

vendored

Normal file

@@ -0,0 +1,15 @@

|

||||

var readdirp = require('..')

|

||||

, path = require('path');

|

||||

|

||||

readdirp({ root: path.join(__dirname), fileFilter: '*.js' })

|

||||

.on('warn', function (err) {

|

||||

console.error('something went wrong when processing an entry', err);

|

||||

})

|

||||

.on('error', function (err) {

|

||||

console.error('something went fatally wrong and the stream was aborted', err);

|

||||

})

|

||||

.on('data', function (entry) {

|

||||

console.log('%s is ready for processing', entry.path);

|

||||

// process entry here

|

||||

});

|

||||

|

||||

15

node_modules/readdirp/node_modules/graceful-fs/LICENSE

generated

vendored

Normal file

@@ -0,0 +1,15 @@

|

||||

The ISC License

|

||||

|

||||

Copyright (c) Isaac Z. Schlueter, Ben Noordhuis, and Contributors

|

||||

|

||||

Permission to use, copy, modify, and/or distribute this software for any

|

||||

purpose with or without fee is hereby granted, provided that the above

|

||||

copyright notice and this permission notice appear in all copies.

|

||||

|

||||

THE SOFTWARE IS PROVIDED "AS IS" AND THE AUTHOR DISCLAIMS ALL WARRANTIES

|

||||

WITH REGARD TO THIS SOFTWARE INCLUDING ALL IMPLIED WARRANTIES OF

|

||||

MERCHANTABILITY AND FITNESS. IN NO EVENT SHALL THE AUTHOR BE LIABLE FOR

|

||||

ANY SPECIAL, DIRECT, INDIRECT, OR CONSEQUENTIAL DAMAGES OR ANY DAMAGES

|

||||

WHATSOEVER RESULTING FROM LOSS OF USE, DATA OR PROFITS, WHETHER IN AN

|

||||

ACTION OF CONTRACT, NEGLIGENCE OR OTHER TORTIOUS ACTION, ARISING OUT OF OR

|

||||

IN CONNECTION WITH THE USE OR PERFORMANCE OF THIS SOFTWARE.

|

||||

133

node_modules/readdirp/node_modules/graceful-fs/README.md

generated

vendored

Normal file

@@ -0,0 +1,133 @@

|

||||

# graceful-fs

|

||||

|

||||

graceful-fs functions as a drop-in replacement for the fs module,

|

||||

making various improvements.

|

||||

|

||||

The improvements are meant to normalize behavior across different

|

||||

platforms and environments, and to make filesystem access more

|

||||

resilient to errors.

|

||||

|

||||

## Improvements over [fs module](https://nodejs.org/api/fs.html)

|

||||

|

||||

* Queues up `open` and `readdir` calls, and retries them once

|

||||

something closes if there is an EMFILE error from too many file

|

||||

descriptors.

|

||||

* fixes `lchmod` for Node versions prior to 0.6.2.

|

||||

* implements `fs.lutimes` if possible. Otherwise it becomes a noop.

|

||||

* ignores `EINVAL` and `EPERM` errors in `chown`, `fchown` or

|

||||

`lchown` if the user isn't root.

|

||||

* makes `lchmod` and `lchown` become noops, if not available.

|

||||

* retries reading a file if `read` results in EAGAIN error.

|

||||

|

||||

On Windows, it retries renaming a file for up to one second if `EACCES`

|

||||

or `EPERM` error occurs, likely because antivirus software has locked

|

||||

the directory.

|

||||

|

||||

## USAGE

|

||||

|

||||

```javascript
// use just like fs
var fs = require('graceful-fs')

// now go and do stuff with it...
fs.readFileSync('some-file-or-whatever')
```

|

||||

|

||||

## Global Patching

|

||||

|

||||

If you want to patch the global fs module (or any other fs-like

|

||||

module) you can do this:

|

||||

|

||||

```javascript
// Make sure to read the caveat below.
var realFs = require('fs')
var gracefulFs = require('graceful-fs')
gracefulFs.gracefulify(realFs)
```

|

||||

|

||||

This should only ever be done at the top-level application layer, in

|

||||

order to delay on EMFILE errors from any fs-using dependencies. You

|

||||

should **not** do this in a library, because it can cause unexpected

|

||||

delays in other parts of the program.

|

||||

|

||||

## Changes

|

||||

|

||||

This module is fairly stable at this point, and used by a lot of

|

||||

things. That being said, because it implements a subtle behavior

|

||||

change in a core part of the node API, even modest changes can be

|

||||

extremely breaking, and the versioning is thus biased towards

|

||||

bumping the major when in doubt.

|

||||

|

||||

The main change between major versions has been switching between

|

||||

providing a fully-patched `fs` module vs monkey-patching the node core

|

||||

builtin, and the approach by which a non-monkey-patched `fs` was

|

||||

created.

|

||||

|

||||

The goal is to trade `EMFILE` errors for slower fs operations. So, if

|

||||

you try to open a zillion files, rather than crashing, `open`

|

||||

operations will be queued up and wait for something else to `close`.
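
The queue-and-retry idea can be sketched without touching the real file system. The code below is an illustration, not graceful-fs itself: `graceful`, `fakeOpen` and `fakeClose` are hypothetical names, and the "file descriptors" are simulated by a counter that allows only one open resource at a time.

```javascript
const queue = [];

// Re-run the oldest queued call, if any (called whenever something finishes).
function retry() {
  const job = queue.shift();
  if (job) job.fn.apply(null, job.args);
}

// Wraps a callback-style operation so that EMFILE/ENFILE failures are
// queued for a later retry instead of being reported to the caller.
function graceful(operation) {
  return function attempt() {
    const args = Array.prototype.slice.call(arguments);
    const cb = args.pop();
    operation.apply(null, args.concat(function (err) {
      if (err && (err.code === 'EMFILE' || err.code === 'ENFILE')) {
        queue.push({ fn: attempt, args: args.concat(cb) });
      } else {
        cb.apply(null, arguments);
        retry();
      }
    }));
  };
}

// Simulated resource with a single free "descriptor".
let openCount = 0;
function fakeOpen(name, cb) {
  if (openCount >= 1) {
    const err = new Error('too many open files');
    err.code = 'EMFILE';
    return cb(err);
  }
  openCount++;
  cb(null, name);
}
function fakeClose() { openCount--; retry(); }

const results = [];
const gracefulOpen = graceful(fakeOpen);
gracefulOpen('a', function (err, name) { results.push(name); });
gracefulOpen('b', function (err, name) { results.push(name); }); // queued, no error surfaces
fakeClose(); // releasing the descriptor lets the queued call for 'b' run
console.log(results);
```

The caller of `gracefulOpen('b', ...)` never sees the `EMFILE` error; its callback simply runs later, which is the trade of errors for slower operations described above.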

|

||||

|

||||

There are advantages to each approach. Monkey-patching the fs means

|

||||

that no `EMFILE` errors can possibly occur anywhere in your

|

||||

application, because everything is using the same core `fs` module,

|

||||

which is patched. However, it can also obviously cause undesirable

|

||||

side-effects, especially if the module is loaded multiple times.

|

||||

|

||||

Implementing a separate-but-identical patched `fs` module is more

|

||||

surgical (and doesn't run the risk of patching multiple times), but

|

||||

also imposes the challenge of keeping in sync with the core module.

|

||||

|

||||

The current approach loads the `fs` module, and then creates a

|

||||

lookalike object that has all the same methods, except a few that are

|

||||

patched. It is safe to use in all versions of Node from 0.8 through

|

||||

7.0.
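
A minimal sketch of the lookalike approach, assuming nothing beyond Node's built-in `fs`: copy every own property of the module onto a new object, then patch methods on the copy only. `cloneModule` and `patched` are hypothetical names, and the sorting patch is just an example of a changed method.

```javascript
const fs = require('fs');

// Copy every own property of the module onto a new object that shares its prototype.
function cloneModule(mod) {
  const copy = Object.create(Object.getPrototypeOf(mod));
  Object.getOwnPropertyNames(mod).forEach(function (key) {
    Object.defineProperty(copy, key, Object.getOwnPropertyDescriptor(mod, key));
  });
  return copy;
}

const patched = cloneModule(fs);
const originalReaddir = fs.readdir;

// Patch only the copy: sort directory listings before handing them on.
patched.readdir = function (dir, cb) {
  return originalReaddir.call(fs, dir, function (err, files) {
    if (files && files.sort) files.sort();
    cb(err, files);
  });
};
```

Code that requires the builtin `fs` keeps the original `readdir`, while code that uses `patched` gets the modified one; every method that was not patched is shared between the two objects.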

|

||||

|

||||

### v4

|

||||

|

||||

* Do not monkey-patch the fs module. This module may now be used as a

|

||||

drop-in dep, and users can opt into monkey-patching the fs builtin

|

||||

if their app requires it.

|

||||

|

||||

### v3

|

||||

|

||||

* Monkey-patch fs, because the eval approach no longer works on recent

|

||||

node.

|

||||

* fixed possible type-error throw if rename fails on windows

|

||||

* verify that we *never* get EMFILE errors

|

||||

* Ignore ENOSYS from chmod/chown

|

||||

* clarify that graceful-fs must be used as a drop-in

|

||||

|

||||

### v2.1.0

|

||||

|

||||

* Use eval rather than monkey-patching fs.

|

||||

* readdir: Always sort the results

|

||||

* win32: requeue a file if error has an OK status

|

||||

|

||||

### v2.0

|

||||

|

||||

* A return to monkey patching

|

||||

* wrap process.cwd

|

||||

|

||||

### v1.1

|

||||

|

||||

* wrap readFile

|

||||

* Wrap fs.writeFile.

|

||||

* readdir protection

|

||||

* Don't clobber the fs builtin

|

||||

* Handle fs.read EAGAIN errors by trying again

|

||||

* Expose the curOpen counter

|

||||

* No-op lchown/lchmod if not implemented

|

||||

* fs.rename patch only for win32

|

||||

* Patch fs.rename to handle AV software on Windows

|

||||

* Close #4 Chown should not fail on einval or eperm if non-root

|

||||

* Fix isaacs/fstream#1 Only wrap fs one time

|

||||

* Fix #3 Start at 1024 max files, then back off on EMFILE

|

||||

* lutimes that doesn't blow up on Linux

|

||||

* A full on-rewrite using a queue instead of just swallowing the EMFILE error

|

||||

* Wrap Read/Write streams as well

|

||||

|

||||

### 1.0

|

||||

|

||||

* Update engines for node 0.6

|

||||

* Be lstat-graceful on Windows

|

||||

* first

|

||||

21

node_modules/readdirp/node_modules/graceful-fs/fs.js

generated

vendored

Normal file

@@ -0,0 +1,21 @@

|

||||

'use strict'

|

||||

|

||||

var fs = require('fs')

|

||||

|

||||

module.exports = clone(fs)

|

||||

|

||||

function clone (obj) {

|

||||

if (obj === null || typeof obj !== 'object')

|

||||

return obj

|

||||

|

||||

if (obj instanceof Object)

|

||||

var copy = { __proto__: obj.__proto__ }

|

||||

else

|

||||

var copy = Object.create(null)

|

||||

|

||||

Object.getOwnPropertyNames(obj).forEach(function (key) {

|

||||

Object.defineProperty(copy, key, Object.getOwnPropertyDescriptor(obj, key))

|

||||

})

|

||||

|

||||

return copy

|

||||

}

|

||||

262

node_modules/readdirp/node_modules/graceful-fs/graceful-fs.js

generated

vendored

Normal file

@@ -0,0 +1,262 @@

|

||||

var fs = require('fs')

|

||||

var polyfills = require('./polyfills.js')

|

||||

var legacy = require('./legacy-streams.js')

|

||||

var queue = []

|

||||

|

||||

var util = require('util')

|

||||

|

||||

function noop () {}

|

||||

|

||||

var debug = noop

|

||||

if (util.debuglog)

|

||||

debug = util.debuglog('gfs4')

|

||||

else if (/\bgfs4\b/i.test(process.env.NODE_DEBUG || ''))

|

||||

debug = function() {

|

||||

var m = util.format.apply(util, arguments)

|

||||

m = 'GFS4: ' + m.split(/\n/).join('\nGFS4: ')

|

||||

console.error(m)

|

||||

}

|

||||

|

||||

if (/\bgfs4\b/i.test(process.env.NODE_DEBUG || '')) {

|

||||

process.on('exit', function() {

|

||||

debug(queue)

|

||||

require('assert').equal(queue.length, 0)

|

||||

})

|

||||

}

|

||||

|

||||

module.exports = patch(require('./fs.js'))

|

||||

if (process.env.TEST_GRACEFUL_FS_GLOBAL_PATCH) {

|

||||

module.exports = patch(fs)

|

||||

}

|

||||

|

||||

// Always patch fs.close/closeSync, because we want to

|

||||

// retry() whenever a close happens *anywhere* in the program.

|

||||

// This is essential when multiple graceful-fs instances are

|

||||

// in play at the same time.

|

||||

module.exports.close =

|

||||

fs.close = (function (fs$close) { return function (fd, cb) {

|

||||

return fs$close.call(fs, fd, function (err) {

|

||||

if (!err)

|

||||

retry()

|

||||

|

||||

if (typeof cb === 'function')

|

||||

cb.apply(this, arguments)

|

||||

})

|

||||

}})(fs.close)

|

||||

|

||||

module.exports.closeSync =

|

||||

fs.closeSync = (function (fs$closeSync) { return function (fd) {

|

||||

// Note that graceful-fs also retries when fs.closeSync() fails.

|

||||

// Looks like a bug to me, although it's probably a harmless one.

|

||||

var rval = fs$closeSync.apply(fs, arguments)

|

||||

retry()

|

||||

return rval

|

||||

}})(fs.closeSync)

|

||||

|

||||

function patch (fs) {

|

||||

// Everything that references the open() function needs to be in here

|

||||

polyfills(fs)

|

||||

fs.gracefulify = patch

|

||||

fs.FileReadStream = ReadStream; // Legacy name.

|

||||

fs.FileWriteStream = WriteStream; // Legacy name.

|

||||

fs.createReadStream = createReadStream

|

||||

fs.createWriteStream = createWriteStream

|

||||

var fs$readFile = fs.readFile

|

||||

fs.readFile = readFile

|

||||

function readFile (path, options, cb) {

|

||||

if (typeof options === 'function')

|

||||

cb = options, options = null

|

||||

|

||||

return go$readFile(path, options, cb)

|

||||

|

||||

function go$readFile (path, options, cb) {

|

||||

return fs$readFile(path, options, function (err) {

|

||||

if (err && (err.code === 'EMFILE' || err.code === 'ENFILE'))

|

||||

enqueue([go$readFile, [path, options, cb]])

|

||||

else {

|

||||

if (typeof cb === 'function')

|

||||

cb.apply(this, arguments)

|

||||

retry()

|

||||

}

|

||||

})

|

||||

}

|

||||

}

|

||||

|

||||

var fs$writeFile = fs.writeFile

|

||||

fs.writeFile = writeFile

|

||||

function writeFile (path, data, options, cb) {

|

||||

if (typeof options === 'function')

|

||||

cb = options, options = null

|

||||

|

||||

return go$writeFile(path, data, options, cb)

|

||||

|

||||

function go$writeFile (path, data, options, cb) {

|

||||

return fs$writeFile(path, data, options, function (err) {

|

||||

if (err && (err.code === 'EMFILE' || err.code === 'ENFILE'))

|

||||

enqueue([go$writeFile, [path, data, options, cb]])

|

||||

else {

|

||||

if (typeof cb === 'function')

|

||||

cb.apply(this, arguments)

|

||||

retry()

|

||||

}

|

||||

})

|

||||

}

|

||||

}

|

||||

|

||||

  var fs$appendFile = fs.appendFile
  if (fs$appendFile)
    fs.appendFile = appendFile
  function appendFile (path, data, options, cb) {
    if (typeof options === 'function')
      cb = options, options = null

    return go$appendFile(path, data, options, cb)

    function go$appendFile (path, data, options, cb) {
      return fs$appendFile(path, data, options, function (err) {
        if (err && (err.code === 'EMFILE' || err.code === 'ENFILE'))
          enqueue([go$appendFile, [path, data, options, cb]])
        else {
          if (typeof cb === 'function')
            cb.apply(this, arguments)
          retry()
        }
      })
    }
  }

  var fs$readdir = fs.readdir
  fs.readdir = readdir
  function readdir (path, options, cb) {
    var args = [path]
    if (typeof options !== 'function') {
      args.push(options)
    } else {
      cb = options
    }
    args.push(go$readdir$cb)

    return go$readdir(args)

    function go$readdir$cb (err, files) {
      if (files && files.sort)
        files.sort()

      if (err && (err.code === 'EMFILE' || err.code === 'ENFILE'))
        enqueue([go$readdir, [args]])
      else {
        if (typeof cb === 'function')
          cb.apply(this, arguments)
        retry()
      }
    }
  }

  function go$readdir (args) {
    return fs$readdir.apply(fs, args)
  }

  if (process.version.substr(0, 4) === 'v0.8') {
    var legStreams = legacy(fs)
    ReadStream = legStreams.ReadStream
    WriteStream = legStreams.WriteStream
  }

  var fs$ReadStream = fs.ReadStream
  ReadStream.prototype = Object.create(fs$ReadStream.prototype)
  ReadStream.prototype.open = ReadStream$open

  var fs$WriteStream = fs.WriteStream
  WriteStream.prototype = Object.create(fs$WriteStream.prototype)
  WriteStream.prototype.open = WriteStream$open

  fs.ReadStream = ReadStream
  fs.WriteStream = WriteStream

  function ReadStream (path, options) {
    if (this instanceof ReadStream)
      return fs$ReadStream.apply(this, arguments), this
    else
      return ReadStream.apply(Object.create(ReadStream.prototype), arguments)
  }

  function ReadStream$open () {
    var that = this
    open(that.path, that.flags, that.mode, function (err, fd) {
      if (err) {
        if (that.autoClose)
          that.destroy()

        that.emit('error', err)
      } else {
        that.fd = fd
        that.emit('open', fd)
        that.read()
      }
    })
  }

  function WriteStream (path, options) {
    if (this instanceof WriteStream)
      return fs$WriteStream.apply(this, arguments), this
    else
      return WriteStream.apply(Object.create(WriteStream.prototype), arguments)
  }

  function WriteStream$open () {
    var that = this
    open(that.path, that.flags, that.mode, function (err, fd) {
      if (err) {
        that.destroy()
        that.emit('error', err)
      } else {
        that.fd = fd
        that.emit('open', fd)
      }
    })
  }

  function createReadStream (path, options) {
    return new ReadStream(path, options)
  }

  function createWriteStream (path, options) {
    return new WriteStream(path, options)
  }

  var fs$open = fs.open
  fs.open = open
  function open (path, flags, mode, cb) {
    if (typeof mode === 'function')
      cb = mode, mode = null

    return go$open(path, flags, mode, cb)

    function go$open (path, flags, mode, cb) {
      return fs$open(path, flags, mode, function (err, fd) {
        if (err && (err.code === 'EMFILE' || err.code === 'ENFILE'))
          enqueue([go$open, [path, flags, mode, cb]])
        else {
          if (typeof cb === 'function')
            cb.apply(this, arguments)
          retry()
        }
      })
    }
  }

  return fs
}

function enqueue (elem) {
  debug('ENQUEUE', elem[0].name, elem[1])
  queue.push(elem)
}

function retry () {
  var elem = queue.shift()
  if (elem) {
    debug('RETRY', elem[0].name, elem[1])
    elem[0].apply(null, elem[1])
  }
}
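The queue-and-retry pattern used by the wrappers above (park a call on EMFILE/ENFILE, re-run it once another operation finishes) can be tried in isolation. The following is a minimal sketch, not the library's code: it assumes a fake `open` limited to one descriptor, and `makeRetryingOp`, `fakeOpen`, `fakeClose`, `pending`, and `log` are hypothetical names introduced only for illustration.

```javascript
// Illustrative sketch only; every name here is hypothetical, not graceful-fs API.
const pending = [] // calls parked until a descriptor frees up

function enqueue (fn, args) {
  pending.push([fn, args])
}

function retry () {
  const next = pending.shift()
  if (next) next[0].apply(null, next[1])
}

// Wrap a callback-style operation so that EMFILE/ENFILE failures are
// queued for a later retry instead of being reported to the caller.
function makeRetryingOp (op) {
  return function attempt (arg, cb) {
    op(arg, function (err, result) {
      if (err && (err.code === 'EMFILE' || err.code === 'ENFILE')) {
        enqueue(attempt, [arg, cb])
      } else {
        cb(err, result)
        retry() // a finished call is a good moment to drain the queue
      }
    })
  }
}

// Fake resource: at most one "descriptor" may be open at a time.
let inUse = 0
function fakeOpen (name, cb) {
  if (inUse >= 1) {
    const err = new Error('too many open files')
    err.code = 'EMFILE'
    return cb(err)
  }
  inUse++
  cb(null, name)
}

function fakeClose () {
  inUse--
  retry() // freeing a descriptor lets a parked call run
}

const open = makeRetryingOp(fakeOpen)
const log = []
open('a', function (err, fd) { log.push('opened ' + fd) })
open('b', function (err, fd) { log.push('opened ' + fd) }) // parked: limit reached
fakeClose() // releases the slot, which re-runs the parked open('b')
```

The caller of `open('b', ...)` never sees the EMFILE error; its callback simply fires later, once the slot is free.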

118

node_modules/readdirp/node_modules/graceful-fs/legacy-streams.js

generated

vendored

Normal file

@@ -0,0 +1,118 @@

var Stream = require('stream').Stream

module.exports = legacy

function legacy (fs) {
  return {
    ReadStream: ReadStream,
    WriteStream: WriteStream
  }

  function ReadStream (path, options) {
    if (!(this instanceof ReadStream)) return new ReadStream(path, options);

    Stream.call(this);

    var self = this;

    this.path = path;
    this.fd = null;
    this.readable = true;
    this.paused = false;

    this.flags = 'r';
    this.mode = 438; /*=0666*/
    this.bufferSize = 64 * 1024;

    options = options || {};

    // Mixin options into this
    var keys = Object.keys(options);
    for (var index = 0, length = keys.length; index < length; index++) {
      var key = keys[index];
      this[key] = options[key];
    }

    if (this.encoding) this.setEncoding(this.encoding);

    if (this.start !== undefined) {
      if ('number' !== typeof this.start) {
        throw TypeError('start must be a Number');
      }
      if (this.end === undefined) {
        this.end = Infinity;
      } else if ('number' !== typeof this.end) {
        throw TypeError('end must be a Number');
      }

      if (this.start > this.end) {
        throw new Error('start must be <= end');
      }

      this.pos = this.start;
    }

    if (this.fd !== null) {
      process.nextTick(function() {
        self._read();
      });
      return;
    }

    fs.open(this.path, this.flags, this.mode, function (err, fd) {
      if (err) {
        self.emit('error', err);
        self.readable = false;
        return;
      }

      self.fd = fd;
      self.emit('open', fd);
      self._read();
    })
  }

  function WriteStream (path, options) {
    if (!(this instanceof WriteStream)) return new WriteStream(path, options);

    Stream.call(this);

    this.path = path;
    this.fd = null;
    this.writable = true;

    this.flags = 'w';
    this.encoding = 'binary';
    this.mode = 438; /*=0666*/
    this.bytesWritten = 0;

    options = options || {};

    // Mixin options into this
    var keys = Object.keys(options);
    for (var index = 0, length = keys.length; index < length; index++) {
      var key = keys[index];
      this[key] = options[key];
    }

    if (this.start !== undefined) {
      if ('number' !== typeof this.start) {
        throw TypeError('start must be a Number');
      }
      if (this.start < 0) {
        throw new Error('start must be >= zero');
      }

      this.pos = this.start;
    }

    this.busy = false;
    this._queue = [];

    if (this.fd === null) {
      this._open = fs.open;
      this._queue.push([this._open, this.path, this.flags, this.mode, undefined]);
      this.flush();
    }
  }
}
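The `start`/`end` checks performed by the legacy `ReadStream` constructor above can be read as one small validation step. The sketch below restates that logic as a standalone function so it can be exercised without a stream; `normalizeRange` is a hypothetical helper, not something exported by graceful-fs.

```javascript
// Hypothetical helper mirroring the range checks in the legacy ReadStream
// constructor. It returns a copy rather than mutating a stream object.
function normalizeRange (options) {
  const range = { start: options.start, end: options.end }

  // No start given: the constructor skips all range handling.
  if (range.start === undefined) return range

  if (typeof range.start !== 'number') {
    throw new TypeError('start must be a Number')
  }
  if (range.end === undefined) {
    range.end = Infinity // read to the end of the file
  } else if (typeof range.end !== 'number') {
    throw new TypeError('end must be a Number')
  }
  if (range.start > range.end) {
    throw new Error('start must be <= end')
  }
  return range
}
```

For example, `normalizeRange({ start: 5 })` yields an `end` of `Infinity`, while `normalizeRange({ start: 10, end: 2 })` throws.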

111

node_modules/readdirp/node_modules/graceful-fs/package.json

generated

vendored

Normal file

@@ -0,0 +1,111 @@

{
  "_args": [
    [
      {
        "raw": "graceful-fs@^4.1.2",
        "scope": null,
        "escapedName": "graceful-fs",
        "name": "graceful-fs",
        "rawSpec": "^4.1.2",
        "spec": ">=4.1.2 <5.0.0",
        "type": "range"
      },
      "C:\\Users\\chvra\\Documents\\angular-play\\nodeRest\\node_modules\\readdirp"
    ]
  ],
  "_from": "graceful-fs@>=4.1.2 <5.0.0",
  "_id": "graceful-fs@4.1.11",
  "_inCache": true,
  "_location": "/readdirp/graceful-fs",
  "_nodeVersion": "6.5.0",
  "_npmOperationalInternal": {
    "host": "packages-18-east.internal.npmjs.com",
    "tmp": "tmp/graceful-fs-4.1.11.tgz_1479843029430_0.2122855328489095"
  },
  "_npmUser": {
    "name": "isaacs",
    "email": "i@izs.me"
  },
  "_npmVersion": "3.10.9",
  "_phantomChildren": {},
  "_requested": {
    "raw": "graceful-fs@^4.1.2",
    "scope": null,
    "escapedName": "graceful-fs",
    "name": "graceful-fs",
    "rawSpec": "^4.1.2",
    "spec": ">=4.1.2 <5.0.0",
    "type": "range"
  },
  "_requiredBy": [
    "/readdirp"
  ],
  "_resolved": "https://registry.npmjs.org/graceful-fs/-/graceful-fs-4.1.11.tgz",
  "_shasum": "0e8bdfe4d1ddb8854d64e04ea7c00e2a026e5658",
  "_shrinkwrap": null,
  "_spec": "graceful-fs@^4.1.2",
  "_where": "C:\\Users\\chvra\\Documents\\angular-play\\nodeRest\\node_modules\\readdirp",
  "bugs": {
    "url": "https://github.com/isaacs/node-graceful-fs/issues"
  },
  "dependencies": {},
  "description": "A drop-in replacement for fs, making various improvements.",
  "devDependencies": {
    "mkdirp": "^0.5.0",
    "rimraf": "^2.2.8",
    "tap": "^5.4.2"
  },
  "directories": {
    "test": "test"
  },
  "dist": {
    "shasum": "0e8bdfe4d1ddb8854d64e04ea7c00e2a026e5658",
    "tarball": "https://registry.npmjs.org/graceful-fs/-/graceful-fs-4.1.11.tgz"
  },
  "engines": {
    "node": ">=0.4.0"
  },
  "files": [
    "fs.js",
    "graceful-fs.js",
    "legacy-streams.js",
    "polyfills.js"
  ],
  "gitHead": "65cf80d1fd3413b823c16c626c1e7c326452bee5",
  "homepage": "https://github.com/isaacs/node-graceful-fs#readme",
  "keywords": [
    "fs",
    "module",
    "reading",
    "retry",
    "retries",
    "queue",
    "error",
    "errors",
    "handling",
    "EMFILE",
    "EAGAIN",
    "EINVAL",
    "EPERM",
    "EACCESS"
  ],
  "license": "ISC",
  "main": "graceful-fs.js",
  "maintainers": [
    {
      "name": "isaacs",
      "email": "i@izs.me"
    }
  ],
  "name": "graceful-fs",
  "optionalDependencies": {},
  "readme": "ERROR: No README data found!",
  "repository": {
    "type": "git",
    "url": "git+https://github.com/isaacs/node-graceful-fs.git"
  },
  "scripts": {
    "test": "node test.js | tap -"
  },
  "version": "4.1.11"
}

330

node_modules/readdirp/node_modules/graceful-fs/polyfills.js

generated

vendored

Normal file

@@ -0,0 +1,330 @@

var fs = require('./fs.js')
var constants = require('constants')

var origCwd = process.cwd
var cwd = null

var platform = process.env.GRACEFUL_FS_PLATFORM || process.platform

process.cwd = function() {
  if (!cwd)
    cwd = origCwd.call(process)
  return cwd
}
try {
  process.cwd()
} catch (er) {}

var chdir = process.chdir
process.chdir = function(d) {
  cwd = null
  chdir.call(process, d)
}

module.exports = patch

function patch (fs) {
  // (re-)implement some things that are known busted or missing.

  // lchmod, broken prior to 0.6.2
  // back-port the fix here.
  if (constants.hasOwnProperty('O_SYMLINK') &&
      process.version.match(/^v0\.6\.[0-2]|^v0\.5\./)) {
    patchLchmod(fs)
  }

  // lutimes implementation, or no-op
  if (!fs.lutimes) {
    patchLutimes(fs)
  }

  // https://github.com/isaacs/node-graceful-fs/issues/4
  // Chown should not fail on einval or eperm if non-root.
  // It should not fail on enosys ever, as this just indicates
  // that a fs doesn't support the intended operation.

  fs.chown = chownFix(fs.chown)
  fs.fchown = chownFix(fs.fchown)
  fs.lchown = chownFix(fs.lchown)

  fs.chmod = chmodFix(fs.chmod)
  fs.fchmod = chmodFix(fs.fchmod)
  fs.lchmod = chmodFix(fs.lchmod)

  fs.chownSync = chownFixSync(fs.chownSync)
  fs.fchownSync = chownFixSync(fs.fchownSync)
  fs.lchownSync = chownFixSync(fs.lchownSync)

  fs.chmodSync = chmodFixSync(fs.chmodSync)
  fs.fchmodSync = chmodFixSync(fs.fchmodSync)
  fs.lchmodSync = chmodFixSync(fs.lchmodSync)

  fs.stat = statFix(fs.stat)
  fs.fstat = statFix(fs.fstat)
  fs.lstat = statFix(fs.lstat)

  fs.statSync = statFixSync(fs.statSync)
  fs.fstatSync = statFixSync(fs.fstatSync)
  fs.lstatSync = statFixSync(fs.lstatSync)

  // if lchmod/lchown do not exist, then make them no-ops
  if (!fs.lchmod) {
    fs.lchmod = function (path, mode, cb) {
      if (cb) process.nextTick(cb)
    }
    fs.lchmodSync = function () {}
  }
  if (!fs.lchown) {
    fs.lchown = function (path, uid, gid, cb) {
      if (cb) process.nextTick(cb)
    }
    fs.lchownSync = function () {}
  }

  // on Windows, A/V software can lock the directory, causing this
  // to fail with an EACCES or EPERM if the directory contains newly
  // created files. Try again on failure, for up to 60 seconds.

  // Set the timeout this long because some Windows Anti-Virus, such as Parity
  // bit9, may lock files for up to a minute, causing npm package install
  // failures. Also, take care to yield the scheduler. Windows scheduling gives
  // CPU to a busy looping process, which can cause the program causing the lock
  // contention to be starved of CPU by node, so the contention doesn't resolve.
  if (platform === "win32") {
    fs.rename = (function (fs$rename) { return function (from, to, cb) {
      var start = Date.now()
      var backoff = 0;
      fs$rename(from, to, function CB (er) {
        if (er
            && (er.code === "EACCES" || er.code === "EPERM")
            && Date.now() - start < 60000) {
          setTimeout(function() {
            fs.stat(to, function (stater, st) {
              if (stater && stater.code === "ENOENT")
                fs$rename(from, to, CB);
              else
                cb(er)
            })
          }, backoff)
          if (backoff < 100)
            backoff += 10;
          return;
        }
        if (cb) cb(er)
      })
    }})(fs.rename)
  }

  // if read() returns EAGAIN, then just try it again.
  fs.read = (function (fs$read) { return function (fd, buffer, offset, length, position, callback_) {
    var callback
    if (callback_ && typeof callback_ === 'function') {
      var eagCounter = 0
      callback = function (er, _, __) {
        if (er && er.code === 'EAGAIN' && eagCounter < 10) {
          eagCounter ++
          return fs$read.call(fs, fd, buffer, offset, length, position, callback)
        }
        callback_.apply(this, arguments)
      }
    }
    return fs$read.call(fs, fd, buffer, offset, length, position, callback)
  }})(fs.read)

  fs.readSync = (function (fs$readSync) { return function (fd, buffer, offset, length, position) {
    var eagCounter = 0
    while (true) {
      try {
        return fs$readSync.call(fs, fd, buffer, offset, length, position)
      } catch (er) {
        if (er.code === 'EAGAIN' && eagCounter < 10) {
          eagCounter ++
          continue
        }
        throw er
      }
    }
  }})(fs.readSync)
}

function patchLchmod (fs) {
  fs.lchmod = function (path, mode, callback) {
    fs.open( path
           , constants.O_WRONLY | constants.O_SYMLINK
           , mode
           , function (err, fd) {
      if (err) {
        if (callback) callback(err)
        return
      }
      // prefer to return the chmod error, if one occurs,
      // but still try to close, and report closing errors if they occur.
      fs.fchmod(fd, mode, function (err) {
        fs.close(fd, function(err2) {
          if (callback) callback(err || err2)
        })
      })
    })
  }

  fs.lchmodSync = function (path, mode) {
    var fd = fs.openSync(path, constants.O_WRONLY | constants.O_SYMLINK, mode)

    // prefer to return the chmod error, if one occurs,
    // but still try to close, and report closing errors if they occur.
    var threw = true
    var ret
    try {
      ret = fs.fchmodSync(fd, mode)
      threw = false
    } finally {
      if (threw) {
        try {
          fs.closeSync(fd)
        } catch (er) {}
      } else {
        fs.closeSync(fd)
      }
    }
    return ret
  }
}

function patchLutimes (fs) {
  if (constants.hasOwnProperty("O_SYMLINK")) {
    fs.lutimes = function (path, at, mt, cb) {
      fs.open(path, constants.O_SYMLINK, function (er, fd) {
        if (er) {
          if (cb) cb(er)
          return
        }
        fs.futimes(fd, at, mt, function (er) {
          fs.close(fd, function (er2) {
            if (cb) cb(er || er2)
          })
        })
      })
    }

    fs.lutimesSync = function (path, at, mt) {
      var fd = fs.openSync(path, constants.O_SYMLINK)
      var ret
      var threw = true
      try {
        ret = fs.futimesSync(fd, at, mt)
        threw = false
      } finally {
        if (threw) {
          try {
            fs.closeSync(fd)
          } catch (er) {}
        } else {
          fs.closeSync(fd)
        }
      }
      return ret
    }

  } else {
    fs.lutimes = function (_a, _b, _c, cb) { if (cb) process.nextTick(cb) }
    fs.lutimesSync = function () {}
  }
}

function chmodFix (orig) {
  if (!orig) return orig
  return function (target, mode, cb) {
    return orig.call(fs, target, mode, function (er) {
      if (chownErOk(er)) er = null
      if (cb) cb.apply(this, arguments)
    })
  }
}

function chmodFixSync (orig) {
  if (!orig) return orig
  return function (target, mode) {
    try {
      return orig.call(fs, target, mode)
    } catch (er) {
      if (!chownErOk(er)) throw er
    }
  }
}

function chownFix (orig) {
  if (!orig) return orig
  return function (target, uid, gid, cb) {
    return orig.call(fs, target, uid, gid, function (er) {
      if (chownErOk(er)) er = null
      if (cb) cb.apply(this, arguments)
    })
  }
}

function chownFixSync (orig) {
  if (!orig) return orig
  return function (target, uid, gid) {
    try {
      return orig.call(fs, target, uid, gid)
    } catch (er) {
      if (!chownErOk(er)) throw er
    }
  }
}

function statFix (orig) {
  if (!orig) return orig
  // Older versions of Node erroneously returned signed integers for
  // uid + gid.
  return function (target, cb) {
    return orig.call(fs, target, function (er, stats) {
      if (!stats) return cb.apply(this, arguments)
      if (stats.uid < 0) stats.uid += 0x100000000
      if (stats.gid < 0) stats.gid += 0x100000000
      if (cb) cb.apply(this, arguments)
    })
  }
}

function statFixSync (orig) {
  if (!orig) return orig
  // Older versions of Node erroneously returned signed integers for
  // uid + gid.
  return function (target) {
    var stats = orig.call(fs, target)
    if (stats.uid < 0) stats.uid += 0x100000000
    if (stats.gid < 0) stats.gid += 0x100000000
    return stats;
  }
}

// ENOSYS means that the fs doesn't support the op. Just ignore
// that, because it doesn't matter.
//
// if there's no getuid, or if getuid() is something other
// than 0, and the error is EINVAL or EPERM, then just ignore
// it.
//
// This specific case is a silent failure in cp, install, tar,
// and most other unix tools that manage permissions.
//
// When running as root, or if other types of errors are
// encountered, then it's strict.
function chownErOk (er) {
  if (!er)
    return true

  if (er.code === "ENOSYS")
    return true

  var nonroot = !process.getuid || process.getuid() !== 0
  if (nonroot) {
    if (er.code === "EINVAL" || er.code === "EPERM")
      return true
  }

  return false
}
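Two of the smaller rules in this file are easy to check by hand: the signed-to-unsigned correction applied to `uid`/`gid` in `statFix`, and the "which chown errors are safe to ignore" decision in `chownErOk`. The sketch below restates both as pure functions. `toUnsignedId` and `isIgnorableChownError` are hypothetical names, and the effective uid is passed in as a parameter (an assumption made here so the logic does not depend on `process.getuid`).

```javascript
// Hypothetical helpers restating two rules from the polyfills; not library API.

// A 32-bit id that was read as a signed integer is shifted back into the
// unsigned range by adding 2^32 (0x100000000).
function toUnsignedId (id) {
  return id < 0 ? id + 0x100000000 : id
}

// uid is the caller's effective user id, or undefined where the platform
// has no getuid (for example Windows).
function isIgnorableChownError (err, uid) {
  if (!err) return true
  // The filesystem does not support the operation at all.
  if (err.code === 'ENOSYS') return true
  // Non-root callers get the lenient treatment for EINVAL and EPERM.
  const nonRoot = uid === undefined || uid !== 0
  return nonRoot && (err.code === 'EINVAL' || err.code === 'EPERM')
}
```

For instance, an id reported as `-2` maps to `4294967294`, and an `EPERM` error is ignorable for uid `1000` but not for uid `0`.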

1

node_modules/readdirp/node_modules/isarray/.npmignore

generated

vendored

Normal file

@@ -0,0 +1 @@

node_modules

4

node_modules/readdirp/node_modules/isarray/.travis.yml

generated

vendored

Normal file

@@ -0,0 +1,4 @@

language: node_js
node_js:
  - "0.8"
  - "0.10"

6

node_modules/readdirp/node_modules/isarray/Makefile

generated

vendored

Normal file

6

node_modules/readdirp/node_modules/isarray/Makefile